Augmented Reality#2: Is that picture level?

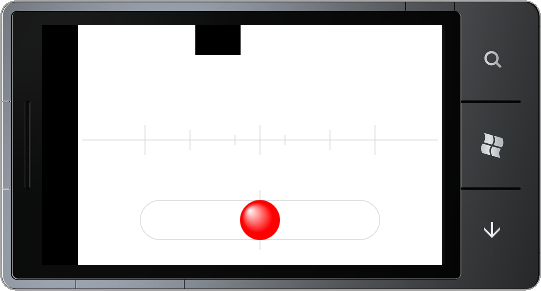

A while ago I wrote a post about creating a simple spirit-level using the Accelerometer in Windows Phone 7. In the Mango release a number of things have changed in the way the Accelerometer API is accessed and how its results are returned. There has also been one huge new feature released in Mango that takes the simple spirit-level app to a whole new level. Of course I am speaking about the VideoBrush control, which gives your app access to the live video feed from the device’s camera.

As I pondered potential uses of Augmented Reality, my mind drifted back to the spirit-level application and I began to think about how useful it would be to stand back from an object and determine whether it was level. I mean, you have to admit it is easier to stand back from your newly hung photo frame and check that it is level than to simply guess. OK, you may be able to place your trusty spirit-level (or WP7) against a photo frame and test that it is level, but what about when you are standing in the Louvre and suddenly realise that the Mona Lisa looks a little crooked? I don’t think those burly security guards are going to let you walk up and put your phone on top of the frame.

The first thing you will notice when dealing with the Accelerometer in Mango is that the ReadingChanged event is no longer the one to use: it has been marked obsolete. According to MSDN, it has been replaced by the CurrentValueChanged event. The next thing you’ll notice is that the CurrentValueChanged handler receives a SensorReadingEventArgs&lt;AccelerometerReading&gt; parameter instead of the AccelerometerReadingEventArgs parameter we utilised in our code for Windows Phone 7. In the Mango release, Microsoft have decided to standardise the way developers access the ever-increasing range of sensors, including the Accelerometer, Compass, Gyroscope and Motion.

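    //display the video feed (PhotoCamera lives in Microsoft.Devices)
    PhotoCamera m_camera = new PhotoCamera();
    this.videoBrush.SetSource(m_camera);

    //start the Accelerometer (Microsoft.Devices.Sensors)
    //sensor is assumed to be an Accelerometer field declared on the page
    sensor.CurrentValueChanged += new EventHandler<SensorReadingEventArgs<AccelerometerReading>>(sensor_CurrentValueChanged);
    sensor.Start();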

Thankfully, all of these changes result in only a handful of changes to the original Windows Phone 7.0 code. One thing the Mango 7.5 code takes advantage of, especially when dealing with 3D objects, is the XNA framework. This is evident from the fact that the event args passed to the CurrentValueChanged handler no longer expose X, Y and Z directly, but an XNA type called Vector3 (Microsoft.Xna.Framework.Vector3). Again, a small change to our previous code enables us to visualise in which direction the device is tilted, just like a real spirit-level.

    void sensor_CurrentValueChanged(object sender, SensorReadingEventArgs<AccelerometerReading> e)
    {
        //sensor events arrive on a background thread, so hop to the UI thread
        Dispatcher.BeginInvoke(() => MyReadingChanged(e));
    }

    void MyReadingChanged(SensorReadingEventArgs<AccelerometerReading> e)
    {
        //use the XNA Vector3 object to access X,Y,Z
        Microsoft.Xna.Framework.Vector3 moved = e.SensorReading.Acceleration;
        //slide the ball along the track in proportion to the tilt on the Y axis
        BallTransform.X = -moved.Y * (Track.Width - Ball.Width);
    }
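If you want to keep the ball on the track when the phone is shaken, or show the tilt as a number, the arithmetic can be pulled out into a small helper. What follows is only a sketch under a few assumptions: the class and method names are made up for illustration, the ball is assumed to sit in the centre of the track when the device is level, and the phone is assumed to be held upright in landscape so that gravity falls mostly along the X axis. The sign of the angle flips depending on which way round you hold the phone.

```csharp
using System;

// Hypothetical helper, not part of the original app.
public static class SpiritLevelMath
{
    // Same scaling as the handler above, but clamped so the ball cannot
    // travel further than half the free track width either side of centre
    // (assumes the ball is centred when the reading is zero).
    public static double BallOffset(double accelY, double trackWidth, double ballWidth)
    {
        double maxTravel = (trackWidth - ballWidth) / 2.0;
        double offset = -accelY * (trackWidth - ballWidth);
        return Math.Max(-maxTravel, Math.Min(maxTravel, offset));
    }

    // Approximate roll in degrees for a phone held upright in landscape.
    // Math.Abs(accelX) makes the magnitude work for either landscape
    // direction; the sign convention is an assumption.
    public static double RollDegrees(double accelX, double accelY)
    {
        return Math.Atan2(accelY, Math.Abs(accelX)) * 180.0 / Math.PI;
    }
}
```

In the handler you would then assign the result of BallOffset(moved.Y, Track.Width, Ball.Width) to BallTransform.X instead of the raw product.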

Augmented Reality#1: Display The Live Video Feed

There are loads of interesting Augmented Reality (AR) apps doing the rounds on all smartphone platforms, and now Microsoft has given developers all the tools they need to build great AR apps for the Mango release of Windows Phone.

The first step in creating the next big augmented reality app is to display the live video feed. Before the Mango release, the only interaction developers had with the camera was using the CameraCaptureTask, which I covered in an earlier post. The CameraCaptureTask only lets you start the built-in camera app and wait for it to send back the results to your app.

With the new features in Mango, you can access the feed directly from the camera with only a few lines of code. All you need to do is set the source of the VideoBrush control to the device’s camera.

To start, add a Rectangle to your MainPage XAML and set its Fill to a VideoBrush.

    <Rectangle>
        <Rectangle.Fill>
            <VideoBrush x:Name="videoBrush"/>
        </Rectangle.Fill>
    </Rectangle>

In the code-behind, add a using statement for Microsoft.Devices and then, in either the Loaded or OnNavigatedTo method, set the source of the VideoBrush to an instance of the PhotoCamera class.

    void MainPage_Loaded(object sender, RoutedEventArgs e)
    {
        PhotoCamera m_camera = new PhotoCamera();
        this.videoBrush.SetSource(m_camera);
    }

Compile and run the app in the emulator and you’ll see an exciting black square moving around on a white background. Don’t worry, on a real device you will see the actual camera feed.

Now, if you are lucky enough to have a real Windows Phone 7 device and you have upgraded to Mango, you will find the results are less than ideal. In fact, the results are about 90 degrees off ideal! Yes, that’s right, the code that everyone is flogging off as being able to display the live camera feed displays the resulting video 90 degrees off what it should be. It’s all very interesting and art-nouveau, but in terms of AR apps, it’s rubbish.

Thankfully it’s very easy to fix the problem. All you need to do is apply a transformation to the VideoBrush used to fill the Rectangle.

    <Rectangle>
        <Rectangle.Fill>
            <VideoBrush x:Name="videoBrush">
                <VideoBrush.RelativeTransform>
                    <CompositeTransform CenterX="0.5" CenterY="0.5" Rotation="90" />
                </VideoBrush.RelativeTransform>
            </VideoBrush>
        </Rectangle.Fill>
    </Rectangle>

This will rotate the camera feed so that what you expect to see through the viewfinder is actually what you get.
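The 90 degrees used above suits a page shown in portrait. If your page also supports landscape, the angle needs to change with the orientation. Here is a rough sketch of that mapping as a plain function. The enum is a hypothetical stand-in for the phone’s page orientation, the names are invented for illustration, and the landscape values (0 and 180) assume a rear-facing camera whose sensor is mounted in landscape, so treat them as a starting point to verify on your own device.

```csharp
using System;

// Hypothetical stand-in for the page orientation values on the phone.
public enum ViewOrientation { PortraitUp, LandscapeLeft, LandscapeRight }

public static class ViewfinderRotation
{
    // Returns the clockwise rotation, in degrees, to apply to the brush.
    // 90 for portrait comes from the XAML above; the landscape values
    // are assumptions for a rear-facing, landscape-mounted sensor.
    public static double DegreesFor(ViewOrientation orientation)
    {
        switch (orientation)
        {
            case ViewOrientation.PortraitUp:
                return 90.0;
            case ViewOrientation.LandscapeRight:
                return 180.0;
            default:
                return 0.0; // LandscapeLeft: feed already matches the screen
        }
    }
}
```

The returned value would go into the Rotation property of the CompositeTransform, keeping CenterX and CenterY at 0.5 so the feed spins around its middle.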

This is of course only the first step to creating a fully fledged AR application that will combine the digital and real world in ways you only dreamed of, but it is a huge step forward compared to the old CameraCaptureTask days.

This code is not only useful for augmented reality. With a small amount of work you can combine it with any number of the existing launcher and chooser tasks in WP7 to create apps that show the real-world camera feed behind whatever tasks you need to perform.

In the next few posts we’ll look at building a simple email and SMS tool that uses the camera feed so that users can see where they are going while writing that all-important email.